2023. 4. 7. 21:31ㆍ_Study/AI

End-to-end ML project🐇¸.•*¨*•¸.•*¨*•¸.•*¨*•¸.•*¨*•

This post is based on lecture study material. Anything beyond the lecture content is what I studied and organized from searches and various other sources.

#AI #인공지능 #기계학습과인식 #chatgpt #python #study #0407

Main steps for an end-to-end ML project (steps 1~4 are about data)

1. Look at the big picture.

2. Get the data.

3. Discover and visualize the data to gain insights.

4. Prepare the data for Machine Learning algorithms.

5. Select a model and train it.

6. Fine-tune your model.

7. Present your solution.

8. Launch, monitor, and maintain your system.

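Let's look at the process by which machine learning is carried out.

The machine learning process

1. Frame the Problem (supervised, unsupervised, reinforcement learning)

Once you are given a problem, ask detailed questions. Define precisely and strictly what the business objective is and what the model is ultimately for.

Should you use supervised, unsupervised, or reinforcement learning?

Is it a classification task or a regression task? Think about which approach to use.

The next step is to select a performance measure.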

2. Get the data.

This step can be broken into four sub-steps.

2.1. Create Workspace

2.2. Download the data.

2.3. Take a Quick look at the data structure.

2.4. Create a Test set

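Decide on a workspace to work in, bring in the data, get a sense of its structure, and then create a test set.

Write a loading function that builds the csv_path and returns the data read from it.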
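A minimal sketch of such a loader, assuming the data is stored as a CSV file. The function name `load_data`, the `data_dir` argument, and the default file name `data.csv` are hypothetical and not taken from the lecture.

```python
import os
import pandas as pd

def load_data(data_dir, filename="data.csv"):
    """Build the CSV path from a directory and file name, then load it."""
    # Hypothetical layout: the CSV file lives directly inside data_dir.
    csv_path = os.path.join(data_dir, filename)
    return pd.read_csv(csv_path)
```

Calling `load_data("some/workspace")` would then return a pandas DataFrame, provided the file exists at that path.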

Use functions such as .head() and .describe() to take a quick look at the data structure.
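As a small illustration of these two calls, here is a sketch on a toy DataFrame. The column names and values are made up for the example and do not come from the lecture data.

```python
import pandas as pd

# Toy data standing in for a real dataset (values are made up).
df = pd.DataFrame({
    "rooms": [6, 120, 850, 39320],
    "income": [0.5, 3.2, 8.1, 15.0],
})

first_rows = df.head(2)   # the first 2 rows
summary = df.describe()   # count, mean, std, min, quartiles, max per numeric column

print(first_rows)
print(summary)
```

`.head()` shows what individual rows look like, while `.describe()` gives the range and spread of each numeric column at a glance.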

Write a function that splits the data into train and test sets at a fixed ratio. At this point, work with a domain expert to decide which variables matter most in the data.
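One way to write such a split function is sketched below, assuming the data is a NumPy array with one row per sample. The name `split_train_test` and the fixed `seed` are choices made for this example.

```python
import numpy as np

def split_train_test(data, test_ratio, seed=42):
    """Shuffle the row indices and split them into train and test parts."""
    rng = np.random.default_rng(seed)      # fixed seed so the split is reproducible
    shuffled = rng.permutation(len(data))
    test_size = int(len(data) * test_ratio)
    test_idx = shuffled[:test_size]
    train_idx = shuffled[test_size:]
    return data[train_idx], data[test_idx]

data = np.arange(100).reshape(50, 2)       # 50 toy samples with 2 features each
train, test = split_train_test(data, test_ratio=0.2)
print(len(train), len(test))
```

scikit-learn's `train_test_split` does the same job and also accepts a `stratify` argument, which is useful once an important variable has been identified and the test set should preserve its distribution.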

3. Discover and visualize the data to gain insights.

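At this stage the ability to read data, that is, data literacy, can be important. For example, if the points in a scatter plot of two variables trend diagonally upward or downward, the two variables can be said to be correlated.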
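The upward or downward trend can also be checked numerically with a correlation coefficient. The sketch below uses synthetic data (made up for illustration) and pandas' `DataFrame.corr()`, which computes the Pearson correlation by default.

```python
import numpy as np
import pandas as pd

rng = np.random.default_rng(0)
x = np.linspace(0, 10, 200)
df = pd.DataFrame({
    "x": x,
    "up": 2.0 * x + rng.normal(0, 1, size=x.size),     # trends upward with x
    "down": -1.5 * x + rng.normal(0, 1, size=x.size),  # trends downward with x
    "noise": rng.normal(0, 1, size=x.size),            # unrelated to x
})

# Correlation of every column with x: near +1, near -1, and near 0 respectively.
corr_with_x = df.corr()["x"]
print(corr_with_x)
```

A value near +1 corresponds to an upward diagonal in the scatter plot and a value near -1 to a downward one. Note that this coefficient only captures linear relationships.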

4. Prepare the data for ML algorithms.

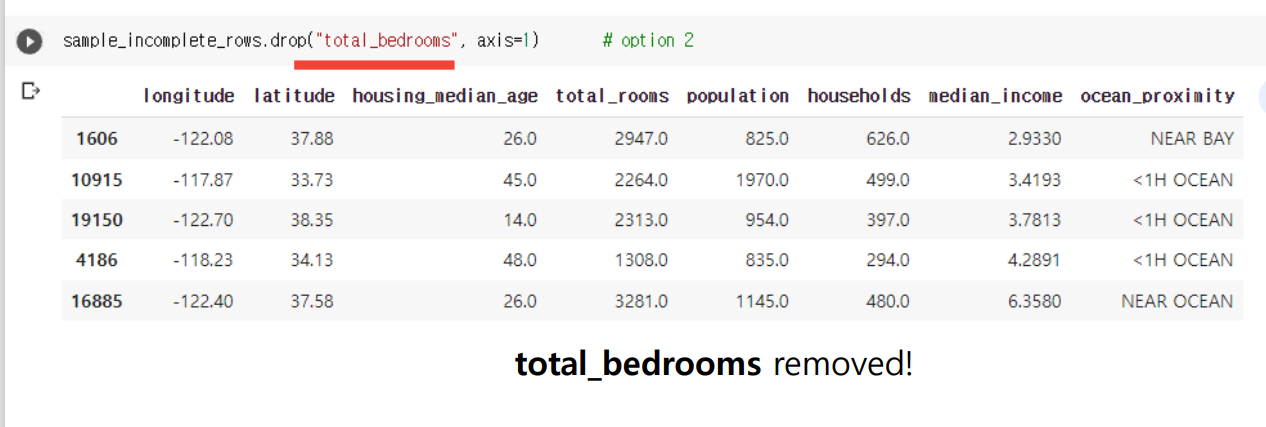

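Data Cleaning (data preprocessing)

Remove unnecessary data. Then convert categorical values into a consistent representation such as strings or numbers (integer or floating-point data); this converting is called encoding. In other words, convert and unify all types so that type-related errors do not occur during training.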
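A short sketch of encoding a categorical column with pandas. The column name `proximity` and its values are hypothetical, and the two encodings shown are common options rather than necessarily the ones used in the lecture.

```python
import pandas as pd

# Hypothetical categorical column.
df = pd.DataFrame({"proximity": ["inland", "near_bay", "inland", "island"]})

# Integer encoding: each category becomes an integer code (alphabetical order here).
codes = df["proximity"].astype("category").cat.codes

# One-hot encoding: one 0/1 column per category, which avoids implying an order.
one_hot = pd.get_dummies(df["proximity"], dtype=int)

print(codes.tolist())
print(one_hot)
```

Integer codes are compact but suggest that categories are ordered; one-hot columns avoid that at the cost of more features.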

Feature Scaling (transformation)

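Problem: the number of rooms ranges from 6 to 39,320, while the median value ranges only from 0 to 15.

There are two common ways to handle attributes with such different scales.

- min-max scaling (normalization; values end up ranging from 0 to 1): rescales the values so they fall between 0 and 1

Subtract the minimum from each value, then divide by the difference between the maximum and the minimum.

- standardization (unit variance, not bounded)

Scales using the mean and standard deviation: subtract the mean, then divide by the standard deviation. The result has zero mean and unit variance; it becomes a standard normal distribution only if the data were normally distributed to begin with. Unlike min-max scaling, the range is not bounded, and standardization is much less affected by outliers (more robust).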
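The two scaling methods above can be sketched with NumPy as follows. The function names and the sample values (including the single extreme outlier) are made up for illustration.

```python
import numpy as np

def min_max_scale(values):
    """Rescale to [0, 1] via (x - min) / (max - min)."""
    values = np.asarray(values, dtype=float)
    lo, hi = values.min(), values.max()
    return (values - lo) / (hi - lo)   # assumes hi != lo

def standardize(values):
    """Rescale to zero mean and unit variance via (x - mean) / std."""
    values = np.asarray(values, dtype=float)
    return (values - values.mean()) / values.std()   # assumes std != 0

# Made-up values with one extreme outlier.
rooms = np.array([6.0, 1000.0, 2000.0, 3000.0, 39320.0])
mm = min_max_scale(rooms)
st = standardize(rooms)

print(mm)
print(st)
```

With min-max scaling, the outlier pins the maximum at 1 and squeezes all other values close to 0, whereas the standardized values are not forced into a fixed range. In scikit-learn, `MinMaxScaler` and `StandardScaler` provide the same transformations.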

5. Select a model and train it

Linear Regression: a machine learning algorithm that models the linear relationship between an input variable X and an output variable Y.

from ~~ import Model

my_model = Model()

my_model.fit(X_train, y_train)  # train the model on the training features and labels

my_model.predict(X_test)  # predict outputs for new inputs
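Filled in with a concrete model, the pattern above might look like the following sketch using scikit-learn's `LinearRegression`. The data is synthetic and follows y = 3x + 2 exactly, chosen only so the result is easy to verify.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Synthetic training data following y = 3x + 2 (made up for illustration).
X_train = np.array([[0.0], [1.0], [2.0], [3.0]])
y_train = 3.0 * X_train.ravel() + 2.0

model = LinearRegression()
model.fit(X_train, y_train)           # learn slope and intercept from the training data

X_test = np.array([[4.0], [5.0]])
predictions = model.predict(X_test)   # close to [14., 17.] for this noise-free data
print(predictions)
```

Note that `fit` takes the training features and their labels, not the validation set; validation data is used afterwards to evaluate the fitted model.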

From here, keep looking for a better model.

6. Fine-tune your model

This is the step where you decide which model to use and which hyperparameters to set.

1. Grid Search

Pros: it automatically finds the best hyperparameter combination among the values you list.

Cons: it takes a long time.
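A minimal grid search sketch with scikit-learn's `GridSearchCV`. The choice of `Ridge` as the model, the `alpha` values, and the synthetic data are all assumptions made for this example.

```python
import numpy as np
from sklearn.linear_model import Ridge
from sklearn.model_selection import GridSearchCV

# Synthetic regression data (made up for illustration).
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.1, size=60)

# Every listed value is tried, each with 3-fold cross-validation.
param_grid = {"alpha": [0.01, 0.1, 1.0, 10.0]}
search = GridSearchCV(Ridge(), param_grid, cv=3, scoring="neg_mean_squared_error")
search.fit(X, y)

best_alpha = search.best_params_["alpha"]
print(best_alpha)
```

The cost grows with the product of the number of values per hyperparameter and the number of folds, which is why grid search becomes slow as the grid gets larger.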

2. Randomized Search

When there are too many hyperparameters to search exhaustively, try this instead.
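A sketch of randomized search using scikit-learn's `RandomizedSearchCV`, which samples a fixed number of combinations instead of trying them all. The model, the sampled ranges, and the synthetic data are assumptions for this example.

```python
import numpy as np
from scipy.stats import randint
from sklearn.ensemble import RandomForestRegressor
from sklearn.model_selection import RandomizedSearchCV

# Synthetic regression data (made up for illustration).
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 3))
y = X @ np.array([1.5, -2.0, 0.5]) + rng.normal(scale=0.1, size=60)

# Distributions to sample from; randint(low, high) excludes high.
param_distributions = {
    "n_estimators": randint(5, 30),
    "max_depth": randint(2, 8),
    "min_samples_leaf": randint(1, 5),
}
search = RandomizedSearchCV(
    RandomForestRegressor(random_state=0),
    param_distributions,
    n_iter=5,          # only 5 sampled combinations are evaluated
    cv=3,
    random_state=0,
)
search.fit(X, y)
print(search.best_params_)
```

The `n_iter` argument sets the search budget directly, so the running time stays under control no matter how many hyperparameters are included.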

3. Ensemble methods (combining models)

Try to combine the models that perform best.

The group will often perform better than the best individual model.
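One simple way to combine models is to average their predictions. The sketch below uses scikit-learn's `VotingRegressor` for that; the three member models and the synthetic data are assumptions for this example, not the lecture's setup.

```python
import numpy as np
from sklearn.ensemble import RandomForestRegressor, VotingRegressor
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeRegressor

# Synthetic nonlinear data (made up for illustration).
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(100, 1))
y = np.sin(X).ravel() + rng.normal(scale=0.1, size=100)

ensemble = VotingRegressor([
    ("linear", LinearRegression()),
    ("tree", DecisionTreeRegressor(max_depth=4, random_state=0)),
    ("forest", RandomForestRegressor(n_estimators=20, random_state=0)),
])
ensemble.fit(X, y)   # fits a clone of each member on the same data

X_new = np.array([[0.5]])
combined = ensemble.predict(X_new)   # unweighted mean of the members' predictions
individual = [est.predict(X_new) for est in ensemble.estimators_]
print(combined, individual)
```

Averaging tends to help most when the member models make different kinds of errors, so mixing model families is a common choice.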

8. Launch, monitor, and maintain your system.

Deployment is not the end of the story: keep improving the system.

That is the overall flow. Remember the order of the steps and try applying them.
